Education

Ph.D., Ecole Centrale de Lyon

M.E., Beijing Jiaotong University

B.E. (Educational Technology) & B.A. (English), Beijing Normal University

Research Interests

Virtual Reality, 3D Learning Resources, Educational Applications of Intelligent Interfaces, Cognitive and Emotional States Recognition and Educational Brain-Computer Interfaces, Artificial Intelligence Applications in Rural Classrooms

Courses

An Introduction to Python Programming

HCI and E-learning Design Principles

Educational Data Mining

Data Analysis and Machine Learning Techniques

Construction and Application of Virtual Reality Teaching Resources

Educational Software and Big Data

Grants

National Natural Science Foundation of China (PI)

Research on Recognition of Confusion with BCI and Two-way Regulation Strategies in Intelligent Tutoring System

National Natural Science Foundation of China (PI)

The workload and learning emotions recognition using EEG-based brain-computer interface and the evaluation as well as adjustment of cognitive states in adaptive e-learning

Natural Science Foundation of Shaanxi Province (PI)

Research on computer vision based attention recognition and intelligent interaction in E-learning

Selected Publications

Intelligent Interaction and Context-based IntelliSense system

Zhou, Y., Xu, T.

Xu, T., Wang, J., Zhang, G., Zhang, L., Zhou, Y.

Confused or not: decoding brain activity and recognizing confusion in reasoning learning using EEG.

Journal of Neural Engineering, IOP Publishing, 20(2), 2023, SCI Q2, IF: 5.043

(Abstract)

Objective. Confusion is the primary epistemic emotion in the learning process, influencing

students’ engagement and whether they become frustrated or bored. However, research on

confusion in learning is still in its early stages, and there is a need to better understand how to

recognize it and what electroencephalography (EEG) signals indicate its occurrence. The present

work investigates confusion during reasoning learning using EEG, and aims to fill this gap with a

multidisciplinary approach combining educational psychology, neuroscience and computer

science. Approach. First, we design an experiment to actively and accurately induce confusion in

reasoning. Second, we propose a subjective and objective joint labeling technique to address the

label noise issue. Third, to confirm that the confused state can be distinguished from the

non-confused state, we compare and analyze the mean band power of confused and unconfused

states across five typical bands. Finally, we present an EEG database for confusion analysis, together

with benchmark results from conventional (Naive Bayes, Support Vector Machine, Random Forest,

and Artificial Neural Network) and end-to-end (Long Short Term Memory, Residual Network, and

EEGNet) machine learning methods. Main results. Findings revealed: 1. Significant differences in

the power of delta, theta, alpha, beta and lower gamma between confused and non-confused

conditions; 2. A higher attentional and cognitive load when participants were confused; and 3.

The Random Forest algorithm with time-domain features achieved a high accuracy/F1 score

(88.06%/0.88 for the subject-dependent approach and 84.43%/0.84 for the subject-independent

approach) in the binary classification of the confused and non-confused states. Significance. The

study advances our understanding of confusion and provides practical insights for recognizing and

analyzing it in the learning process. It extends existing theories on the differences between confused

and non-confused states during learning and contributes to the cognitive-affective model. The

research enables researchers, educators, and practitioners to monitor confusion, develop adaptive

systems, and test recognition approaches.

Xu, T., Dang, W., Wang, J., Zhou, Y.

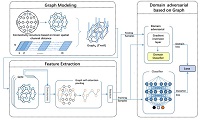

DAGAM: a domain adversarial graph attention model for subject-independent EEG-based emotion recognition.

Journal of Neural Engineering, IOP Publishing, 20(1), 2023, SCI Q2, IF: 5.043

(Abstract)

Objective. Due to individual differences in electroencephalogram (EEG) signals, the learning model

built by the subject-dependent technique from one person’s data would be inaccurate when applied

to another person for emotion recognition. Thus, the subject-dependent approach for emotion

recognition may result in poor generalization performance when compared to the

subject-independent approach. However, existing studies have attempted but have not fully utilized

EEG’s topology, nor have they solved the problem caused by the difference in data distribution

between the source and target domains. Approach. To eliminate individual differences in EEG

signals, this paper proposes the domain adversarial graph attention model, a novel EEG-based

emotion recognition model. The basic idea is to generate a graph using biological topology to

model multichannel EEG signals. Graph theory can topologically describe and analyze EEG

channel relationships and mutual dependencies. Then, unlike other graph convolutional networks,

self-attention pooling is used to benefit from the extraction of salient EEG features from the graph,

effectively improving performance. Finally, following graph pooling, the domain adversarial model

based on the graph is used to identify and handle EEG variation across subjects, achieving good

generalizability efficiently. Main Results. We conduct extensive evaluations on two benchmark data

sets (SEED and SEED IV) and obtain cutting-edge results in subject-independent emotion

recognition. Our model boosts the SEED accuracy to 92.59% (4.06% improvement) with the

lowest standard deviation (STD) of 3.21% (2.46% decrements) and SEED IV accuracy to 80.74%

(6.90% improvement) with the lowest STD of 4.14% (3.88% decrements), respectively. The

computational complexity is drastically reduced in comparison to similar efforts (33 times lower).

Significance. We have developed a model that significantly reduces the computation time while

maintaining accuracy, making EEG-based emotion decoding more practical and generalizable.

Zhou, Y., Xu, T., Yang, H., Li, S.

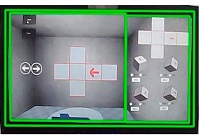

Improving Spatial Visualization and Mental Rotation Using FORSpatial Through Shapes and Letters in Virtual Environment.

IEEE Transactions on Learning Technologies, IEEE Education Society, 15(3):326-337, 2022, SSCI Q1, IF: 4.433

(Abstract)

Existing research on spatial ability recognizes the critical role played by spatial visualization and mental rotation. Recent evidence suggests that external visualization and manipulation can boost spatial thinking.

The virtual environment provides an exciting opportunity so that many spatial ability training tasks based on reading printed illustrations can be migrated to a highly 3-D interactive and visualized environment. However, few studies have employed virtual reality (VR)

technology to improve spatial visualization and mental rotation. In addition, the design of training contents and corresponding VR applications are still lacking. In this work, we propose FORSpatial, a system mainly for spatial ability training in a virtual environment. First, in this article, we design a novel scheme and principles for generating tasks, involving spatial visualization

and mental rotation through flexible combinations of shapes and letters. Based on this, we create testing questions and a FORSpatial training application in desktop VR. FORSpatial and its components are made publicly available and free to use. To evaluate the performance of spatial training, verify the usability of the FORSpatial application, and analyze learning behavior, we organized a user study with 49 participants, including an experimental group and a control group. The comparison between experimental and control groups shows the significant improvement of spatial skills through training. The analysis of interaction logging data and subjective comments reveals how FORSpatial supports spatial thinking.

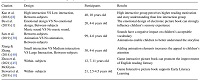

Pi, Z., Deng, L., Wang, X., Guo, P., Xu, T., Zhou, Y.

The influences of a virtual instructor's voice and appearance on learning from video lectures.

Journal of Computer Assisted Learning, Wiley, 38(6):1703-1713, 2022, SSCI Q1, IF: 3.761

(Abstract)

Background: Video lectures which include the instructor's presence are becoming

increasingly popular. Presenting a real human does, however, entail higher financial

and time costs in making videos, and one innovative approach to reduce costs has

been to generate a virtual speaking instructor.

Objectives: The current study examined whether the use of a virtual instructor in

video lectures would facilitate learning as well as a human instructor, and whether

manipulating the virtual instructor's characteristics (i.e., voice and appearance) might

optimize the effectiveness of the virtual instructor.

Methods: Our study set four conditions. In the control condition, students watched a

human instructor. In the experiment conditions, students watched one of (a) a virtual

instructor which used the human instructor's voice and an AI image, (b) a virtual

instructor which spoke in an AI voice with an AI image made to speak using text-to-speech and lip synthesis techniques, or (c) a virtual instructor which used an AI voice

and an AI likable-image of an instructor.

Results and Conclusions: The AI likable instructor condition had a significant positive

effect on students' learning performance and motivation, without decreasing the

attention students paid to the learning materials.

Implications: Our findings suggest that instructional video designers can make use of

AI voices and AI images of likable humans as instructors to motivate students and

enhance their learning performance.

Xu, T., Zhou, Y., Hou, Z., Zhang, W.

Decode Brain System: A Dynamic Adaptive Convolutional Quorum Voting Approach for Variable-Length EEG Data.

Complexity, Wiley-Hindawi, 2020:1-9, 2020, SCI Q2, IF: 2.121

(Abstract)

The brain is a complex and dynamic system, consisting of interacting sets and the temporal evolution of these sets. Electroencephalogram (EEG) recordings of brain activity play a vital role to decode the cognitive process of human beings in learning

research and application areas. In the real world, people react to stimuli differently, and the duration of brain activities varies

between individuals. Therefore, the length of EEG recordings in trials gathered in the experiment is variable. However, current

approaches either fix the length of EEG recordings in each trial which would lose information hidden in the data or use the sliding

window which would consume large computation on overlapped parts of slices. In this paper, we propose TOO (Traverse Only

Once), a new approach for processing variable-length EEG trial data. TOO is a convolutional quorum voting approach that breaks

the fixed structure of the model through convolutional implementation of sliding windows and the replacement of the fully

connected layer by the 1 × 1 convolutional layer. Each output cell generated from 1 × 1 convolutional layer corresponds to each

slice created by a sliding time window, which reflects changes in cognitive states. Then, TOO employs quorum voting on output

cells and determines the cognitive state representing the entire single trial. Our approach provides an adaptive model for trials of

different lengths with traversing EEG data of each trial only once to recognize cognitive states. We design and implement a

cognitive experiment and obtain EEG data. Using the data collected from this experiment, we conducted an evaluation to

compare TOO with a state-of-the-art sliding window end-to-end approach. The results show that TOO yields a good accuracy

(83.58%) at the trial level with a much lower computation (11.16%). It also has the potential to be used in variable signal processing

in other application areas.

Zhou, Y., Xu, T., Li, S., Shi, R.

Beyond Engagement: an EEG-based Methodology for Assessing User's Confusion in Educational Game.

Universal Access in the Information Society, Springer, 18(3):551–563, 2019, SCI Q3, IF: 2.629

(Abstract)

Confusion is an emotion, which may occur when the learner is confronting inconsistence between new knowledge and existing cognitive structure, or reasoning for solving the puzzle and problem. Although confusion is not pleasant, it is necessary

for the learner to engage in understanding and deep learning. Consequently, confusion assessment has attracted increased

interest in e-learning. However, current studies have targeted no further than engagement detection and measurement, while

there is lack of studies in investigating cognitive and emotional aspects beyond engagement in the context of game-based

learning. To quantify confused states in logic reasoning in game-based learning, we propose an EEG-based methodology

for assessing the user’s confusion using the OpenBCI device with 8 channels. In the complicated context of game play, it is

difficult, and sometimes impossible, to collect the ground truth of the data in real tasks. To solve this issue, this work leverages cross-task and cross-subject methods to build a classifier, that is, training on the data of one standardized cognitive test

paradigm (Raven’s test) and testing on the data of real tasks in game play (Sokoban Game). It provides a new possibility

to create a classifier based on a small dataset. We also employ the end-to-end algorithm of deep learning in machine learning. Results showed the feasibility of this proposal in the task variation of the classifier, with an accuracy of 91.04%. The

proposed EEG-based methodology is suitable to analyze learners’ confusion on the long game-play duration and has a good

adaption in real tasks.

Zhou, Y., Qi, L., Raake, A., Xu, T., Piekarska, M., Zhang, X.

User Attitudes and Behaviors towards Personalized Control of Privacy Settings on Smartphones.

Concurrency and Computation: Practice and Experience, Wiley, 31(22):e4884, 2018, SCI Q3, IF: 1.831

(Abstract)

The fine-grained access control has been proved to be a reliable tool to ensure preserving of privacy of end users. In fog computing, one of the challenges is to understand users' attitudes and

behaviors toward personalized control. However, few of studies have given a clear view on users'

perception of the burden of interactivity when they set complex privacy settings. To this end, we

conducted a user study including a lab study with 26 participants and an evaluation with 223

participants. From the lab study, we found that participants were satisfied with improved privacy settings but did not adapt well to complex personalized interfaces. We proposed effective

methods to assist users to balance between the full control and the additional interaction burden,

including sorting, recommendations, and establishing profiles. After this lab study, we organized

a survey evaluation additionally to explore users' current usage of privacy features. Results from

the evaluation showed that the principal reason that users failed to use privacy features was

that they were not appropriately aware of these features. A key conclusion is that privacy settings should not only let users take over the control of smartphones but also inform them of the

knowledge on privacy practices.

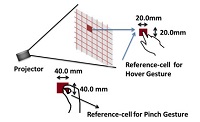

Zhou, Y., Xu, T., David, B., Chalon, R.

Interaction on-the-go: a fine-grained exploration on wearable PROCAM interfaces and gestures in mobile situations.

Universal Access in the Information Society, Springer, 15(2):1-15, 2015, SCI Q3, IF: 2.629

(Abstract)

Wearable projector and camera (PROCAM)

interfaces, which provide a natural, intuitive and spatial

experience, have been studied for many years. However,

existing hand input research into such systems revolved

around investigations into stable settings such as sitting or

standing, not fully satisfying interaction requirements in

sophisticated real life, especially when people are moving.

Besides, increasingly more mobile phone users use their

phones while walking. As a mobile computing device, the

wearable PROCAM system should allow for the fact that

mobility could influence usability and user experience.

This paper proposes a wearable PROCAM system, with

which the user can interact by inputting with finger gestures like the hover gesture and the pinch gesture on projected surfaces. A lab-based evaluation was organized,

which mainly compared two gestures (the pinch gesture

and the hover gesture) in three situations (sitting, standing

and walking) to find out: (1) How and to what degree does

mobility influence different gesture inputs? Are there any

significant differences between gesture inputs in different

settings? (2) What reasons cause these differences? (3)

What do people think about the configuration in such

systems and to what extent does the manual focus impact

such interactions? From qualitative and quantitative points

of view, the main findings imply that mobility impacts

gesture interactions in varying degrees. The pinch gesture

undergoes less influence than the hover gesture in mobile

settings. Both gestures were impacted more in walking

state than in sitting and standing states by all four negative

factors (lack of coordination, jittering hand effect, tired

forearms and extra attention paid). Manual focus influenced mobile projection interaction. Based on the findings,

implications are discussed for the design of a mobile projection interface with gestures.

Zhou, Y., Xu, T., David, B., Chalon, R.

Innovative Wearable Interfaces: An Exploratory Analysis of Paper-based Interfaces with Camera-glasses Device Unit.

Personal and Ubiquitous Computing, Springer, 18(4):835-849, 2013, SCI Q2, IF: 3.006

(Abstract)

The new ubiquitous interaction methods change

people’s life and facilitate their tasks in everyday life and in

the workplace, enabling people to access their personal data as

well as public resources at any time and in any place. We

found two solutions to enable ubiquitous interaction and put a

stop to the limits imposed by the desktop mode: namely

nomadism and mobility. Based on these two solutions, we

have proposed three interfaces (Zhou et al. in HCI international 2011: human–computer interaction. Interaction techniques and environments, Springer, Berlin, pp 500–509,

2011): in-environment interface (IEI), environment dependent interface (EDI), and environment independent interface

(EII). In this paper, we first discuss an overview of IEI, EDI,

and EII, before excluding IEI and focusing on EDI and EII,

their background, and distinct characteristics. We also propose a continuum from physical paper-based interface to

digital projected interface in relation with EDI and EII. Then,

to validate EDI and EII concepts, we design and implement a

MobilePaperAccess system, which is a wearable camera-glasses system with paper-based interface and original input

techniques allowing mobile interaction. Furthermore, we

discuss the evaluation of the MobilePaperAccess system; we

compare two interfaces (EDI and EII) and three input techniques (finger input, mask input, and page input) to test the

feasibility and usability of this system. Both the quantitative

and qualitative results are reported and discussed. Finally, we

provide the prospects and our future work for improving the

current approaches.

Xu, T., Zhou, Y., Zhu, J.

New Advances and Challenges of Fall Detection Systems: A Survey.

Applied Sciences, MDPI, 8(3):418, 2018, SCI Q2, IF: 2.838

(Abstract)

Falling, as one of the main harm threats to the elderly, has drawn researchers’ attentions

and has always been one of the most valuable research topics in the daily health-care for the elderly

in last two decades. Before 2014, several researchers reviewed the development of fall detection,

presented issues and challenges, and navigated the direction for the study in the future. With smart

sensors and Internet of Things (IoT) developing rapidly, this field has made great progress. However,

there is a lack of a review and discussion on novel sensors, technologies and algorithms introduced

and employed from 2014, as well as the emerging challenges and new issues. To bridge this gap,

we present an overview of fall detection research and discuss the core research questions on this

topic. A total of 6830 related documents were collected and analyzed based on the key words.

Among these documents, the twenty most influential and highly cited articles are selected and

discussed profoundly from three perspectives: sensors, algorithms and performance. The findings

would assist researchers in understanding current developments and barriers in the systems of

fall detection. Although researchers achieve fruitful work and progress, this research domain still

confronts challenges on theories and practice. In the near future, the new solutions based on advanced

IoT will sustainably urge the development to prevent falling injuries.

Xu, T., Zhou, Y.

Elders’ fall detection based on biomechanical features using depth camera.

International Journal of Wavelets, Multiresolution and Information Processing, World Scientific Publishing Company, 16(2):1840005, 2018, SCI Q4, IF: 1.276

(Abstract)

An accidental fall poses a serious threat to the health of the elderly. With the advances

of technology, an increased number of surveillance systems have been installed in the

elderly home to help medical staffs find the elderly at risk. Based on the study of human

biomechanical equilibrium, we proposed a fall detection method based on 3D skeleton

data obtained from the Microsoft Kinect. This method leverages the accelerated velocity

of Center of Mass (COM) of different body segments and the skeleton data as main

biomechanical features, and adopts Long Short-Term Memory networks (LSTM) for fall

detection. Compared with other fall detection methods, it does not require older people

to wear any other sensors and can protect the privacy of the elderly. According to the

experiment to validate our method using the existing database, we found that it could

efficiently detect the fall behaviors. Our method provides a feasible solution for the fall

detection that can be applied at homes of the elderly.

Xu, T., Zhou, Y.

Fall prediction based on biomechanics equilibrium using Kinect.

International Journal of Distributed Sensor Networks, SAGE, 13(4), 2017, SCI Q3, IF: 1.938

(Abstract)

The fall is one of the most important research fields of solitary elder healthcare at home based on Internet of Things

technology. Current studies mainly focus on the fall detection, which helps medical staffs bring a fallen elder out of danger in time. However, it neither predicts a fall nor provides an effective protection against a fall. This article studies the

fall prediction based on human biomechanics equilibrium and body posture characteristics through analyzing three-dimensional skeleton joints data from the depth camera sensor Kinect. The research includes building a human bionic

mass model using skeleton joints data from Kinect, determining human balance state, and proposing a fall prediction

algorithm based on recurrent neural networks by unbalanced posture features. We evaluate the model and algorithm on

an open database. The performance indicates that the fall prediction algorithm by studying human biomechanics can predict a fall (91.7%) and provide a certain amount of time (333 ms) before the elder injuring (hitting the floor). This work

provides a technical basis and a data analytics approach for the fall protection.

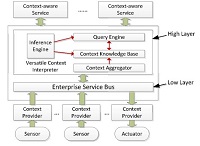

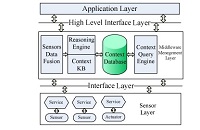

Xu, T., Zhou, Y., David, B., Chalon, R.

A smart brain: an intelligent context inference engine for context-aware middleware.

International Journal of Sensor Networks, Inderscience, 22(3):145-157, 2016, SCI Q4, IF: 1.264

(Abstract)

Recently, ‘activity’ context draws increased attention from researchers in context

awareness. Existing context-aware middleware usually employ the rule-based method, which

is easy to build and also intuitive to work with. However, this method is fragile, not flexible

enough, and is inadequate to support diverse types of tasks. In this paper, we surveyed the related

literature in premier conferences over the past decade, reviewed the main activity context

recognition methods, and summarised their three main facets: basic activity inference, dynamic

activity analysis, and future activity recommendation. Based on our previous work, we then

proposed an intelligent inference engine for our context-aware middleware. Besides satisfying

requirements for checking context consistency, our inference engine integrates the three methods

for activity context recognition to provide a solution for all facets of activity context recognition

based on our context-aware middleware.

Zhang, L., Guo, Y., Ma, Q., Zhou, Y.

An Exploratory Study on Learning in 3D Multi-user Virtual Environments.

2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), IEEE, EI

Chen, Q., Zhang, L., Dong, B., Zhou, Y.

Interactive Cues on Geometry Learning in a Virtual Reality Environment for K-12 Education.

2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), IEEE, EI

Deng, L., Zhou, Y., Cheng, T., Liu, X., Xu, T., Wang, X.

My English Teachers Are Not Human but I Like Them: Research on Virtual Teacher Self-study Learning System in K12.

International Conference on Human-Computer Interaction, Springer, Cham, 13329:176-187, 2022, EI

(Abstract)

English is the most used second language in the world. Mastering vocabulary is the prerequisite for learning English well. In middle

school, students direct their own studying outside the classroom to facilitate memorizing and understanding. However, the lack of teacher guidance in the self-study process will negatively affect learning. Producing

video lecture materials including talking teachers costs time, energy, and

money. The development of artificial intelligence makes it possible to create such materials automatically. However, it is still unclear whether a

system embedded with AI-generated virtual teachers can facilitate self-study compared with the system with merely the speech in self-study

in K12. To address this gap, we conducted a user study with 56 high

school students, collecting learning outcomes, user experience, and learning experience. Results showed that the virtual teacher helps to improve

the student’s English learning performance both in retention and transfer. Participants reported high user and learning experience. Our findings

shed light on the use of virtual teachers for self-study students in K12.

Bai, J., Zhang, H., Chen, Q., Cheng, X., Zhou, Y.

Technical Supports and Emotional Design in Digital Picture Books for Children: A Review.

The 13th International Conference on Ambient Systems, Networks and Technologies, Elsevier B.V., 201:174-180, 2022, EI

(Abstract)

In recent years, the digital picture book has been an increasingly important reading and writing medium for children. Research

on the effects of digital picture books on learning has produced mixed results. As a potential tool, some researchers found that

such books could foster and scaffold for developing emergent literacy in the early childhood education. However, some still have

the skeptical attitude toward integrating the technology in picture books. This survey reviews techniques and emotional design that

have been applied in picture books. We also compared and discussed different types of picture books.

Zhang, L., Liu, X., Chen, Q., Zhou, Y., Xu, T.

EyeBox: A Toolbox based on Python3 for Eye Movement Analysis.

The 13th International Conference on Ambient Systems, Networks and Technologies, Elsevier B.V., 201:166-173, 2022, EI

(Abstract)

Eye tracking technology can reflect human attention and cognition, widely used as a research tool. To analyze eye movement data,

users need to determine a specific area known as areas of interests (AOIs). Although existing tools offer dynamic AOIs functions to

process visual behavior on moving stimuli, they may ask users to use markers to specify contours of moving stimuli in the physical

environment or define AOIs manually on screen. This paper proposes a toolbox named EyeBox to 1) recognize dynamic AOIs

automatically based on SIFT and extract eye movement indicators, as well as 2) draw fixations. We also design a user-friendly

interface for this toolbox. EyeBox currently supports processing data recorded from the Pupil Core device. We compared results

processed manually with those processed by EyeBox in a custom eye-tracking dataset to evaluate this toolbox. The accuracy of 3/4 data for AOI1

is above 90%, and the accuracy of 4/5 data for AOI2 is higher than 90%. Finally, we conducted a user study to test the usability

and user experience of EyeBox.

Tao, Y., Zhang, G., Zhang, D., Wang, F., Zhou, Y., Xu, T.

Exploring Persona Characteristics in Learning: A Review Study of Pedagogical Agents.

The 13th International Conference on Ambient Systems, Networks and Technologies, Elsevier B.V., 201:87-94, 2022, EI

(Abstract)

In digital interactive learning environments, pedagogical agents are digital characters that assist instruction, providing learners

with coaching, feedback, as well as emotional and social support. Recently, increased work has explored how animated, actual, or

artificial pedagogical agents impact learning. Some studies found that agents promote learning, while some revealed that agents

increased the workload and caused learners to lose focus. Especially poorly designed pedagogical agents would aggravate learners’

external cognitive load, negatively affecting learning performance and experience. This work reviewed how features of pedagogical agent roles affect learners’ retention and transfer performance, as well as the learning experience. It extracted five features,

including appearance, gender, facial expression, sound, and movements, then discussed their impact. The survey concludes with

the implications for designing effective agents.

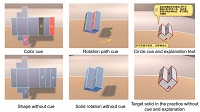

Chen, Q., Deng, L., Xu, T., Zhou, Y.

Visualized Cues for Enhancing Spatial Ability Training in Virtual Reality.(PPT)

2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops, IEEE, 299-300, 2022, EI

(Abstract)

There is a growing research interest in training spatial ability in Virtual Reality (VR), which could provide a fully interactive learning

environment. However, existing spatial ability VR training systems

lack cues to assist mental rotation and visualization. Learners may

suffer from insufficient assistance to discover spatial reasoning rules

in the training process. The aims of most visual cues for pedagogical

purposes are to draw the viewer’s attention, which can not meet

the requirements of spatial training tasks. To address this issue, we

propose three cues for learners to succeed in spatial tasks: color,

rotation path, and circle. These visual cues provide supplementary

spatial information and just-in-time explanations. They help distinguish the different parts of the solid, visualize the spatial information

like rotation and direction, and emphasize the key points to solve

the problems.

Liu, X., Zhang, S., Xu, T., Zhou, Y.

Improving Language Learning by an Interact-to-Learn Desktop VR Application: A Case Study with Peinture. (PPT)

2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops, IEEE, 267-270, 2022, EI

(Abstract)

Mastering vocabulary is a daunting task for second language learners. Desktop Virtual Reality (VR) can inspire and engage learners with interact-to-learn activities to facilitate memorizing vocabulary. This paper proposes a desktop VR application for color vocabulary learning called Peinture. Peinture consists of a lecturing video and an interactive coloring virtual space. The talking teacher in the lecturing video is generated automatically through text-to-speech and lip synthesis approaches. Learners can paint and interact in a theme when or after lecture learning. A preliminary user study based on performance, self-report and eye tracking data analysis shows that interact-to-learn activities provided by Peinture were beneficial for second language learning. However, there is still considerable room to upgrade such educational VR.

Zhang, G., Li, S., Wang, J., Zhou, Y., Xu, T.

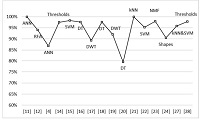

A Semi-automatic Feature Fusion Model for EEG-based Emotion Recognition.

2021 27th International Conference on Mechatronics and Machine Vision in Practice, IEEE, 726-731, 2021, EI

(Abstract)

Electroencephalogram (EEG) is usually used to study cognitive activities, which have different temporal and frequency-domain features. Scientists have attempted to find crucial features to improve recognition accuracy, but this remains challenging. This paper proposed a novel confused emotion recognition method based on EEG, which combines automatic feature extraction (deep learning) and knowledge-based feature extraction. To evaluate our method, we designed an experiment to collect data, the basic idea of which is to induce the confused emotion based on the English listening test. The results show that our method performs better in experiments than Convolutional Neural Networks (CNN) and Support Vector Machine (SVM).

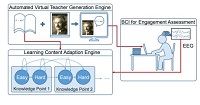

Xu, T., Wang, X., Wang, J., Zhou, Y.

From Textbook to Teacher: an Adaptive Intelligent Tutoring System Based on BCI.

2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society, IEEE, 7621-7624, 2021, EI

(Abstract)

In this work, we propose FT3, an adaptive intelligent tutoring system based on Brain Computer Interface (BCI). It can automatically generate different difficulty levels of lecturing video with teachers from textbook adapting to student engagement measured by BCI. Most current studies employ animated images to create pedagogical agents in such adaptive learning environments. However, evidence suggests that human teacher video brings a better learning experience than animated images. We design a virtual teacher generation engine consisting of text-to-speech (TTS) and lip synthesis method, being able to generate high-quality adaptive lecturing clips of talking teachers with accurate lip sync merely based on a textbook and teacher’s photo. We propose a BCI to measure engagement, serving as an indicator for adaptively generating appropriate lecturing videos. We conduct a preliminary study to build and evaluate FT3. Results verify that FT3 can generate synced lecturing videos, and provide proper levels of learning content with an accuracy of 73.33%.

Xu, T., Zhou, Y., Wang, Y., Zhao, Z., Li, S.

Guess or Not? A Brain-Computer Interface Using EEG Signals for Revealing the Secret behind Scores. (VIDEO)

CHI EA 2019 - Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, ACM, LBW1210:1-6, 2019, EI

(Abstract)

Now examinations and scores serve as the main criterion for a student’s academic performance. However, students use guessing strategies to improve the chances of choosing the right answer in a test. Therefore, scores do not reflect actual levels of the student’s knowledge and skills. In this paper, we propose a brain-computer interface (BCI) to estimate whether a student guesses on a test question or masters it when s/he chooses the right answer in logic reasoning. To build this BCI, we first define the “Guessing” and employ Raven’s Progressive Matrices as logic tests in the experiment to collect EEG signals, then we propose a sliding time-window with quorum-based voting (STQV) approach to recognize the state of “Guessing” or “Understanding”, together with FBCSP and end-to-end ConvNet classification algorithms. Results show that this BCI yields an accuracy of 83.71% and achieves a good performance in distinguishing “Guessing” from “Understanding”.
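
The general idea of combining a sliding time window with quorum-based voting can be illustrated with a minimal sketch. This is not the authors' STQV implementation: the window length, step size, quorum threshold, and the per-window classifier `toy_classifier` are all hypothetical placeholders standing in for details the abstract does not give. Each window of a trial is classified on its own, and the trial is labeled "Guessing" only if the share of "Guessing" votes exceeds the quorum.

```python
from typing import Callable, List, Sequence


def sliding_windows(signal: Sequence[float], window: int, step: int) -> List[Sequence[float]]:
    """Cut one trial into windows of `window` samples, advancing by `step` samples."""
    return [signal[i:i + window] for i in range(0, len(signal) - window + 1, step)]


def quorum_vote(
    signal: Sequence[float],
    classify: Callable[[Sequence[float]], str],
    window: int,
    step: int,
    quorum: float = 0.5,  # assumed threshold, not taken from the paper
) -> str:
    """Label a trial "Guessing" if more than `quorum` of its windows vote "Guessing"."""
    windows = sliding_windows(signal, window, step)
    if not windows:
        raise ValueError("signal is shorter than one window")
    votes = sum(1 for w in windows if classify(w) == "Guessing")
    return "Guessing" if votes / len(windows) > quorum else "Understanding"


def toy_classifier(segment: Sequence[float]) -> str:
    """Hypothetical stand-in for a trained per-window model (e.g. FBCSP or a ConvNet)."""
    return "Guessing" if sum(segment) / len(segment) > 0 else "Understanding"


# Toy single-channel "trial"; real EEG would be multi-channel and far longer.
trial = [1.0, 1.0, 1.0, 1.0, -1.0, -1.0]
label = quorum_vote(trial, toy_classifier, window=2, step=1)
```

With these toy values, three of the five windows vote "Guessing", so the default quorum of 0.5 is exceeded; raising the quorum makes the trial-level decision more conservative.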

Zhou, Y., Xu, T., Li, S., Li, S.

Confusion State Induction and EEG-based Detection in Learning English.

In 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, IEEE, 3290-3293, 2018, EI

(Abstract)

Confusion, as an affective state, has been proved beneficial for learning, although this emotion is always mentioned as negative affect. Confusion causes the learner to solve the problem and overcome difficulties in order to restore the cognitive equilibrium. Once the confusion is successfully resolved, a deeper learning is generated. Therefore, quantifying and visualizing the confusion that occurs in learning, as well as intervening, has gained great interest among researchers. Among these studies, triggering confusion precisely and detecting it is the critical step and underlies other studies. In this paper, we explored the induction of confusion states and the feasibility of detecting confusion using EEG as a first step towards an EEG-based Brain Computer Interface for monitoring the confusion and intervening in the learning. EEG data from 16 participants were recorded and used. Our experiment design to induce confusion was based on tests of Raven’s Standard Progressive Matrices. Each confusing and not-confusing test item was presented for 15 seconds and the raw EEG data was collected via Emotiv headset. To detect the confusion emotion in learning, we propose an end-to-end EEG analysis method. End-to-end classification of Deep Learning in Machine Learning has revolutionized computer vision, and there is growing interest in adopting this method for EEG analysis. The result of this preliminary study was promising, which showed a 71.36% accuracy in classifying users’ confused and unconfused states when they are inferring the rules in the tests.

Xu, T., Zhou, Y., Wang, Z., Peng, Y.

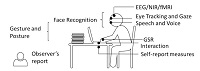

Learning Emotions EEG-based Recognition and Brain Activity: A Survey Study on BCI for Intelligent Tutoring System.

The 9th International Conference on Ambient Systems, Networks and Technologies, Elsevier B.V., 130:376-382, 2018, EI

(Abstract)

Learners experience emotions in a variety of valence and arousal in learning, which impacts the cognitive process and the success of learning. Learning emotions research has a wide range of benefits from improving learning outcomes and experience in Intelligent Tutoring System (ITS), as well as increasing operation and work productivity. This survey reviews techniques that have been used to measure emotions and theories for modeling emotions. It investigates EEG-based Brain-Computer Interaction (BCI) of general and learning emotion recognition. The induction methods of learning emotions and related issues are also included and discussed. The survey concludes with challenges for further learning emotion research.

Zhou, Y., Xu, T., Zhi, X., Wang, Z.

Learning in Doing: A Model of Design and Assessment for Using New Interaction in Educational Game.

International Conference on Learning and Collaboration Technologies LCT 2018: Learning and Collaboration Technologies, Learning and Teaching, Springer, Cham, 10925:225-236, 2018, EI

(Abstract)

To put into practice what has been learned is considered as one of the most important education objectives. In the traditional class, it is difficult for learners to engage in practices since teachers usually convey knowledge and experience by speaking or simple demonstrations, like using slides and videos. This brings obstacles for learners to apply what they have learned to solve real problems. Educational game with the novel input and output technologies is one of the solutions for learners to engage in the learning activities. It can not only effectively encourage learners to learn positively and vividly, promote learning interests and motivation, and enhance the engagement, but also improve their imagination, learning performance and other learning behaviors. This paper first discusses the learning theories related to educational game. Then a literature review is conducted by collecting data on the topics of assessment of educational game with new interaction. Next, we propose a model to guide the design and the evaluation of educational game due to the missing studies. This model underlies an educational game to foster the garbage classification learning with virtual 3D output and natural gesture input using Leap Motion, which is a tracking device of hands and objects. To analyze users’ learning behaviors and evaluate their performance and experience, we conduct an evaluation with 22 college learners. Results showed that the use of the natural interaction not only made the learning interesting and fostered the engagement, but also improved the absorption of knowledge in practices. Finally, a discussion of the challenges and future directions is presented.

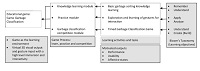

Zhou, Y., Ji, S., Xu, T., Wang, Z.

Promoting Knowledge Construction: A Model for Using Virtual Reality Interaction to Enhance Learning.

The 9th International Conference on Ambient Systems, Networks and Technologies, Elsevier B.V., 130:239-246, 2018, EI

(Abstract)

VR technologies, offering powerful immersion and rich interaction, have gained great interest from researchers and practitioners in the field of education. However, current learning theories and models either mainly take into account the technology perspectives, or focus more on the pedagogy. In this paper, we propose a learning model benefiting from both the Human-Computer Interaction aspects and pedagogical aspects. This model takes full account of the impact of different factors including pedagogical contexts, VR roles and scenarios, and output specifications, which would be combined to inform the design and realize VR education applications. Based on this model, we design and implement an educational application of computer assembly under virtual reality using HTC Vive, which is a headset providing immersion experience. To analyze users’ learning behaviors and evaluate their performance and experience, we conduct an evaluation with 32 college students as participants. We design a questionnaire including usability tests and emotion state measures. Results showed that our proposed learning model gave a good guidance for informing the design and use of VR-supported learning application. The use of the natural interaction not only makes the learning interesting and fosters the engagement, but also improves the construction of knowledge in practices.

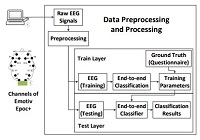

Zhou, Y., Xu, T., Cai, Y., Wu, X., Dong, B.

Monitoring cognitive workload in online videos learning through an EEG-based brain-computer interface.

International Conference on Learning and Collaboration Technologies LCT 2017: Learning and Collaboration Technologies, Learning and Teaching, Springer, Cham, 10295:64-73, 2017, EI

(Abstract)

Student cognitive state is one of the crucial factors determining successful learning [1]. The research community related to education and computer science has developed various approaches for describing and monitoring learning cognitive states. Assessing cognitive states in digital environment makes it possible to supply adaptive instruction and personalized learning for student. This assessment has the same function as the instructor in a real-world classroom observing and adjusting the speed and contents of the lecture in line with students’ cognitive states. The goal is to refocus students’ interest and engagement, making the instruction efficient. In recent years, a growing number of studies have focused on various measures of cognitive states, among which physiological measures are able to monitor in real time, especially electroencephalography (EEG) based brain activity measures. The cognitive workload that students experience while learning instructional materials determines success in learning. In this work, we design and propose a real-time passive Brain-Computer Interaction (BCI) system to monitor the cognitive workload using EEG-based headset Emotiv Epoc+, which is feasible for working in the online digital environment like Massive Open Online Courses (MOOCs). We choose two electrodes to pick up original EEG signals, which are highly relevant to the workload. The current prototype is able to record EEG signals and classify levels of cognitive load when students are watching online course videos. This prototype is based on two layers, using machine learning approaches for classification.

Xu, T., Zhou, Y., David, B., Chalon, R.

Supporting Activity Context Recognition in Context-aware Middleware.

Workshops at the Twenty-Seventh AAAI Conference on Artificial Intelligence (AAAI’13), Bellevue, Washington, USA, 61-70, 2013, AI Access Foundation, EI

(Abstract)

Context-aware middleware is considered as an efficient solution to develop context-aware applications, which provides a feasible development platform integrating various sensors and new technologies. With the development of sensors, research on context has gradually shifted from “location” to “activity”. This puts forward a new requirement for context-aware middleware: activity context recognition. In this paper, we first survey the related literature in premier conferences over the past decade, review the main methods of activity context recognition, and conclude its three main facets: basic activity inference, dynamic activity analysis and future activity recommendation. We then propose an intelligent inference engine integrating three popular methods solving three facets mentioned above respectively, which is based on our context-aware middleware. Finally, to evaluate the feasibility of our proposal, we implement two scenarios: bus stop scenario and domestic activity application.

Xu, T., David, B., Chalon, R., Zhou, Y.

A Context-aware Middleware for Ambient Intelligence.

ACM/IFIP/USENIX 12th International Middleware Conference (Middleware 2011), Poster session, Lisbon, Portugal, 10:1-2, 2011, ACM, EI

(Abstract)

By the acronym MOCOCO we refer to our view of ambient intelligence (AmI), pointing out three main characteristics: Mobility, Contextualization and Collaboration. The ambient intelligence is a challenging research area focusing on ubiquitous computing, profiling practices, context awareness, and human-centric computing and interaction design. We are concretizing our approach in a platform called IMERA (French acronym for Computer Augmented Environment for Mobile Interaction). In order to make several sensors, actuators and mobile smart devices work together, the need of a context-aware middleware for this platform is obvious. In this paper, we present main objectives and solution principles concretized in a context-aware middleware based on hybrid reasoning engine (the ontology reasoning and the decision tree reasoning), which retrieves efficiently high-level contexts from raw data. This platform provides an environment for rapid prototyping of context aware services in Ambient Intelligence (AmI).

notice board

DATABASE AND TOOLS

Confusion EEG DB